4 Reasons Your Organization Doesn’t Trust Artificial Intelligence

Chances are your organization doesn’t trust AI systems to support business-critical decisions. Why not? In a nutshell, today’s stock of machine learning systems is not trustworthy. Causal AI is the only technology that reasons about the world like humans do: through cause-and-effect relationships, imagining counterfactuals, and creating optimal interventions. We set out why humans can trust Causal AI with their most important business decisions and challenges.

What is Trustworthy AI?

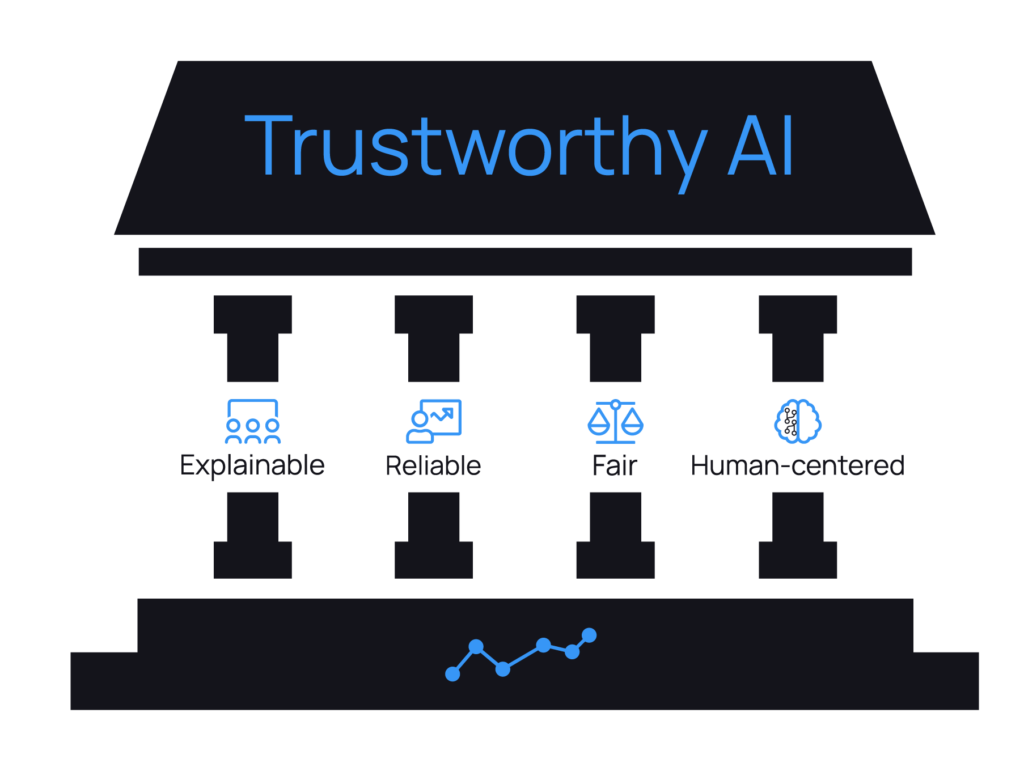

Businesses need AI systems that they can trust sufficiently to adopt and responsibly use. Trustworthy AI rests on four key pillars.

- Explainability: Models must be comprehensible to human users and data subjects — you can’t trust AI systems you can’t understand.

- Reliability: AI should behave as expected and produce solid results, in the field and in changeable conditions.

- Fairness: The model’s assumptions and decisions should be consistent with ethics, regulations, and discrimination law to garner trust.

- Human-centeredness: AI systems should incorporate and augment human intelligence, and their objectives should be aligned with human interests.

Why is Trustworthy AI important?

As AI plays an increasing role in society and business, it’s critical that humans trust machines — it may be the most important bilateral relationship of our era.

Trust is top of the AI agenda for governments. It’s the cornerstone of the OECD’s AI Principles, which 42 governments have signed up to, and it’s the key concept in the EU’s AI ethics regulations.

But trustworthy AI isn’t only a compliance issue. Businesses that foster trusting human-machine partnerships are able to adopt and scale AI more effectively, and have a massively improved chance of reaping the biggest financial benefits of AI.

Absent trustworthy AI, businesses end up not deploying the technology at all: machine learning remains confined to innovation labs or is used only as a marketing tool. Worse still, untrustworthy AI systems are a liability, posing potentially existential risks to the enterprises that deploy them.

This is the sorry state of play for the vast majority of organizations that are experimenting with AI. AI has a trust crisis.

Why does AI have a trust crisis?

This trust crisis isn’t just an AI public relations problem. Correlation-based machine learning technology, the most widely used form of AI, is itself untrustworthy.

Businesses are frustrated by AI that routinely fails during periods of market disruption, as it did during COVID-19. They are mistrustful of complex “black box” models that even their developers can’t understand and that can’t be audited by regulators or business users. And enterprises are wary of the reputational and regulatory risk posed by AI bias, which is an increasingly widespread problem.

What makes Causal AI trustworthy?

Next-generation Causal AI strengthens all four pillars of trustworthy AI, helping enterprises to leap over the AI trust barrier:

- Explainable AI

Causal AI can explain why it made a decision in human-friendly terms. Because causal models are intrinsically interpretable, these explanations are highly reliable. And the assumptions behind the model can be scrutinized and corrected by humans before the model is fully developed. Transparency and auditability are essential conditions for establishing trust.

“84% of CEOs agree that AI-based decisions need to be explainable to be trusted”

PwC CEO survey

Find out more about how Causal AI promotes explainability here.

- Fair AI

Machine learning assumes the future will be very similar to the past — this can lead to algorithms perpetuating historical injustices. Causal AI can envision futures that are decoupled from historical data, enabling users to eliminate biases in input data. Causal AI also empowers domain experts to impose fairness constraints before models are deployed and increases confidence that algorithms are fair.

“Causal models have a unique combination of capabilities that could allow us to better align decision systems with society’s values.”

DeepMind

Learn about how Causal AI promotes algorithmic fairness here.

- Reliable AI

For AI to be trustworthy it must be reliable, even as the world changes. Conventional machine learning models break down in the real world, because they wrongly assume the superficial correlations in today’s data will still hold in the future. Causal AI discovers invariant relationships that tend to hold over time, and so it can be relied on as the world changes.

“Generalizing well outside the [training] setting requires learning not mere statistical associations between variables, but an underlying causal model.”

Google Research and the Montreal Institute for Learning Algorithms

Read our white paper showing how Causal AI adapts faster than conventional algorithms as conditions change.

- Human-centered AI

The final pillar of trustworthy AI — perhaps the keystone piece — is that it must be complementary to human objectives and intelligence. Causal AI is a human-in-the-loop technology, enabling users to communicate their expertise and business context to the AI, shaping the way the algorithm “thinks” via a shared language of causality.

“We can construct robots that learn to communicate in our mother tongue — the language of cause and effect.”

Judea Pearl, AI pioneer

Check out our article setting out how Causal AI opens up new and improved communication channels between experts and AI.

Give me an example of Trustworthy AI!

Let’s take a bank using Causal AI to assist with credit decisioning.

Human-plus-machine intelligence combines to build an intuitive causal model of the business environment, describing the key causal drivers of default risk. These models are robust to both small fluctuations in macroeconomic conditions and big disruptions like COVID-19, thanks to the elimination of spurious correlations.
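To make the point about spurious correlations concrete, here is a minimal, self-contained simulation. Everything in it is an illustrative assumption rather than a description of any real credit model or product: the variable names (`driver`, `proxy`, `risk`), the coefficients, and the `link` parameter that flips when conditions change are all made up. The sketch fits one straight-line model on a genuine cause of default risk and another on a signal that merely tracks risk during the training period, then scores both after the proxy’s relationship with risk reverses.

```python
import random


def simulate(n, link, rng):
    """Draw n toy applicants; `link` sets how the proxy tracks risk."""
    rows = []
    for _ in range(n):
        driver = rng.gauss(0, 1)                 # assumed genuine cause of risk
        risk = 2.0 * driver + rng.gauss(0, 0.5)  # stable causal mechanism
        proxy = link * risk + rng.gauss(0, 0.5)  # correlated signal, not a cause
        rows.append((driver, proxy, risk))
    return rows


def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mse(model, xs, ys):
    """Mean squared prediction error of a fitted line."""
    slope, intercept = model
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
train = simulate(5000, link=1.0, rng=rng)     # "normal" conditions
shifted = simulate(5000, link=-1.0, rng=rng)  # disruption: proxy link reverses

results = {}
for name, column in (("causal", 0), ("proxy", 1)):
    model = fit_line([r[column] for r in train], [r[2] for r in train])
    results[name] = {
        env_name: mse(model, [r[column] for r in env], [r[2] for r in env])
        for env_name, env in (("train", train), ("shifted", shifted))
    }
    print(name, results[name])
```

In this toy setup both models look comparably accurate on the training period, and only the model built on the genuine driver keeps its accuracy once the proxy’s link to risk flips. Note that the causal feature is chosen by hand here; discovering it from data is a much harder problem that this sketch does not attempt.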

Fairness is in the DNA of the model. Causal AI can eliminate any inappropriate influence that protected characteristics (such as race, gender or religion) may have on the credit decision. And the bank’s stakeholders can constrain the model to align with their intuitions about fair lending practices.

Risk analysts and regulators can explain, probe and stress-test the model, examining its macro-level assumptions and zooming in on individual loan applicants. Business stakeholders can run scenario analyses, looking at how economic crises or shifts in monetary policy will impact the loan portfolio or change consumer demand. Dispute resolution is automatic: consumers are given explanations as to why their loan was rejected, along with practical steps they can take to get an approval.
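The idea of pairing a rejection with practical next steps can be sketched in a few lines. The example below is a deliberately simplified assumption, not a real lending model: the features (`income_k`, `debt_ratio`, `missed_payments`), the weights, and the approval threshold are all hypothetical, and the score is a plain linear rule. For a rejected applicant it works out, one feature at a time, how far that feature would need to move for the score to reach the threshold.

```python
# Hypothetical scoring rule: every name and number here is an illustrative assumption.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "missed_payments": -0.5}
BIAS = -0.5
THRESHOLD = 0.0  # approve when score >= THRESHOLD


def score(applicant):
    """Linear credit score for a dict of feature values."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def recourse(applicant):
    """Single-feature changes that would each lift the score to the threshold."""
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return {}  # already approved, nothing to suggest
    steps = {}
    for name, weight in WEIGHTS.items():
        change = gap / weight
        # Skip suggestions that would push a feature below zero.
        if applicant[name] + change >= 0:
            steps[name] = change
    return steps


applicant = {"income_k": 40, "debt_ratio": 0.6, "missed_payments": 1}
print("score:", round(score(applicant), 2))
for name, change in recourse(applicant).items():
    print(f"{name}: change by {change:+.2f}")
```

This sketch treats each feature as independently adjustable. A causal model would go further and account for how changing one factor (say, income) flows through to others (say, debt ratio), which is what makes the suggested steps realistic rather than merely arithmetic.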

The bank can rest safe in the knowledge that Causal AI anticipates all incoming regulations in financial services — from Singapore’s FEAT principles to the European Approach to AI proposal and the Algorithmic Accountability Act in the US.

Trust is hard to gain but easy to lose. causaLens provides Trustworthy AI for the enterprise. Causal AI inspires the trust needed for users to actually leverage AI in their organizations, unlocking the enormous potential of AI. Learn more about the science of the possible from our knowledge base.